When purchasing decisions worth millions depend on your B2B research findings, data quality isn’t optional; it’s essential. Effective screening is your first line of defense for quality data, yet it presents a challenging paradox: screen too rigorously, and you risk disengaging valuable respondents; screen too loosely, and your data integrity suffers. For research directors and insights managers, balancing what you screen for, and how you screen for it, is the key to collecting data only from the right respondents while keeping them engaged and cooperative.

Creating surveys for B2B professionals presents a unique set of challenges. From C-suite executives to specialized decision-makers, these sought-after respondents often command higher incentives than general population targets. The challenge lies in crafting screening questions that validate professional credentials and test for accurate subject knowledge, while maintaining engagement and ensuring authentic responses. Done right, you avoid issues such as:

B2B survey screening needs balance. You want thorough screening for quality data, but you must also keep respondents interested from the first question. Good screening helps protect your data quality and is worth getting right.

Here are the key principles we’ve learned through working with B2B audiences:

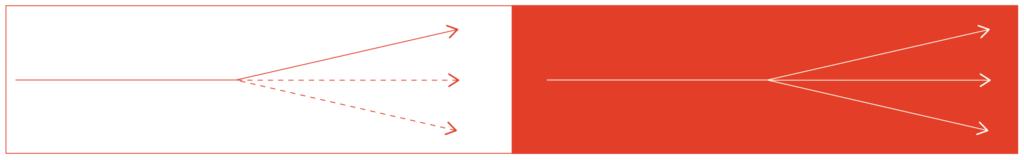

Start broad and narrow down your questions. This keeps people engaged while naturally filtering out unqualified respondents.

Example: When screening senior business decision-makers:

Ask questions that need real industry knowledge but aren’t easily searchable online. Focus on day-to-day work scenarios a qualified respondent would experience rather than technical definitions.

Example: Instead of asking, “What is zero-trust security?” try asking, “Which steps has your organization taken when implementing zero-trust security?” Someone involved in the process can easily describe or identify the correct steps, while those trying to game the system will struggle to provide authentic details.

Write screening questions that don’t hint at the “right” answer.

Example: Instead of “Are you responsible for making final decisions about IT security purchases?”, ask, “How does your organization typically handle IT security purchase decisions?”

Ask about company structure and processes that employees would know, keeping questions broad enough to apply to different organizations.

Example: Structure the question like this: “In your organization’s typical technology buying process, which departments need to approve purchases over $100,000?” This checks organizational knowledge while working across different company structures.

Include options in multiple-choice questions that might catch people trying to guess their way through.

Example: When asking about enterprise software, include one plausible but fake option among the real ones. Use brand names that a respondent with the right experience and responsibilities would know well, plus one made-up choice that is not obviously fake. Respondents who select the fake option can then be flagged.
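A decoy check like this is simple to automate. The following is a minimal sketch, not a description of any particular platform: the brand lists, the decoy name "Zentriq Ledger Cloud" (assumed to be fictional), and the function name `picked_decoy` are all illustrative assumptions.

```python
# Hypothetical answer options for an enterprise-software awareness question.
REAL_BRANDS = {"Salesforce", "SAP", "Workday"}
# Made-up product name used as the decoy (assumed not to exist).
DECOY_BRANDS = {"Zentriq Ledger Cloud"}


def picked_decoy(selected):
    """Return True if the respondent selected any decoy option."""
    return bool(set(selected) & DECOY_BRANDS)


# A respondent who ticks the fake brand is flagged for review.
print(picked_decoy(["SAP", "Zentriq Ledger Cloud"]))
print(picked_decoy(["SAP", "Workday"]))
```

In practice, a decoy selection is usually best treated as one signal among several rather than an automatic disqualification, since a qualified respondent can mis-click.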

Now that we’ve covered the key principles of effective B2B screening, let’s look at how these practices come together in day-to-day research. The success of your B2B research heavily depends on how well you execute your screening strategy in three areas:

Use multiple verification layers to authenticate respondents before they enter your survey. Digital fingerprinting eliminates duplicates, IP verification confirms location accuracy, and email verification adds authentication.

Example: When screening IT decision-makers, our system first checks that the respondent’s IP matches their stated location, verifies their email domain against their claimed company size (enterprise emails vs. personal accounts), and uses digital fingerprinting to ensure they haven’t already completed the survey under different credentials.
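The layered checks in this example could be combined along the following lines. This is a rough sketch under stated assumptions, not the actual system described above: the `Respondent` fields, the free-email domain list, and the flag names are hypothetical, and the IP-to-country lookup and device fingerprint are assumed to come from external services.

```python
from dataclasses import dataclass

# Illustrative list of personal email providers (assumption, not exhaustive).
FREE_EMAIL_DOMAINS = {"gmail.com", "yahoo.com", "outlook.com", "hotmail.com"}


@dataclass
class Respondent:
    email: str
    stated_country: str
    ip_country: str          # assumed already resolved by a geolocation service
    fingerprint: str         # assumed device/browser fingerprint hash
    claims_enterprise: bool  # respondent says they work at an enterprise


def pre_survey_flags(r, seen_fingerprints):
    """Return a list of quality flags raised before the survey starts."""
    flags = []
    # Layer 1: the same device has already completed the survey.
    if r.fingerprint in seen_fingerprints:
        flags.append("duplicate_fingerprint")
    # Layer 2: IP-derived location disagrees with the stated location.
    if r.ip_country != r.stated_country:
        flags.append("location_mismatch")
    # Layer 3: enterprise claim made from a personal email account.
    domain = r.email.rsplit("@", 1)[-1].lower()
    if r.claims_enterprise and domain in FREE_EMAIL_DOMAINS:
        flags.append("personal_email_for_enterprise_claim")
    return flags
```

Returning a list of flags, rather than a single pass/fail value, leaves room to decide later which combinations should disqualify a respondent and which should only trigger a manual review.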

Structure your screening questions to follow natural business processes, moving from general to specific while maintaining a logical flow that matches real workplace hierarchies.

Example:

This sequence feels natural to qualified respondents while efficiently filtering out those without relevant experience.

Cross-reference answers throughout the screening process to catch inconsistencies and flag potential quality issues before they impact your data.

Example: If a respondent claims to handle “$5M+ software budgets” but works at a small nonprofit, your validation system should flag this inconsistency.
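A cross-reference rule of this kind might be sketched as follows. The answer keys, the $5M budget threshold taken from the example, and the 50-employee cutoff for a "small" organization are assumptions chosen for illustration; real rules would be tuned to the study.

```python
def consistency_flags(answers):
    """Compare related screener answers and return any inconsistency flags."""
    flags = []
    budget = answers.get("annual_software_budget_usd", 0)
    org_type = answers.get("org_type")
    employees = answers.get("employee_count", 0)
    # A $5M+ software budget at a small nonprofit is implausible enough to review.
    if budget >= 5_000_000 and org_type == "nonprofit" and employees < 50:
        flags.append("budget_vs_org_size")
    return flags


suspicious = {
    "annual_software_budget_usd": 6_000_000,
    "org_type": "nonprofit",
    "employee_count": 12,
}
print(consistency_flags(suspicious))
```

Each rule compares two or more answers the respondent gave at different points, so someone guessing their way through has to stay consistent across the whole screener.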

When your screening combines the right technology, strategic response validation, and thoughtful question design, you can gather reliable B2B data. These pieces work hand in hand – smart questions catch inconsistencies, validation keeps responses on track, and technology provides the backbone for quality control.

Our clients consistently see fewer disqualified respondents reaching their results; a 30-40% reduction is typical. Removing those respondents through effective screening means far less noise and bad data in the final dataset.

While these screening practices create a strong foundation for quality B2B research, turning them into consistent, reliable results requires expertise and the right partnership.

Over two decades, we’ve refined these methodologies through thousands of successful projects across industries. Our approach combines rigorous screening with efficiency, helping reduce project timelines by 25-35% while maintaining the highest quality standards. When you work with Quest Mindshare, you’re not just accessing a panel; you’re partnering with a team that has spent 20+ years in B2B data collection learning what works and what doesn’t.

